How to successfully set up a dedicated development team model

The pandemic showed that in-house teams can prove insufficient when working conditions change unexpectedly. That is one reason the dedicated team model is increasingly used to support workflows, with highly skilled specialists available “on demand”.

Not every company has employees specialized in IT, so many hire such staff from outside. But how can you increase your potential with third-party talent while reducing overall costs?

In this article, we answer that question and more by focusing on the benefits of a dedicated software development team tailored to your requirements. We also look at where and how to find and hire such a team, and how to manage it effectively.


What is a dedicated team model?

A dedicated development team is a special model of collaboration between a client and an outsourced team that is entirely focused on the client’s project until its completion.

This gives you access to specialists you do not have, and may never have, among your existing staff. They are not your full-time employees, yet they work with the dedication of an in-house team, only remotely. In effect, they are your employees on demand.

Both the provider and the client can control how the remote team works. Clients usually pay for the service monthly, plus an administration fee for coordination. Best of all, you do not have to buy specific software and hardware or deal with the administrative, personnel, tax, and social issues that come with in-house staff. And by delegating project execution to the dedicated team, you can focus on more important business tasks.

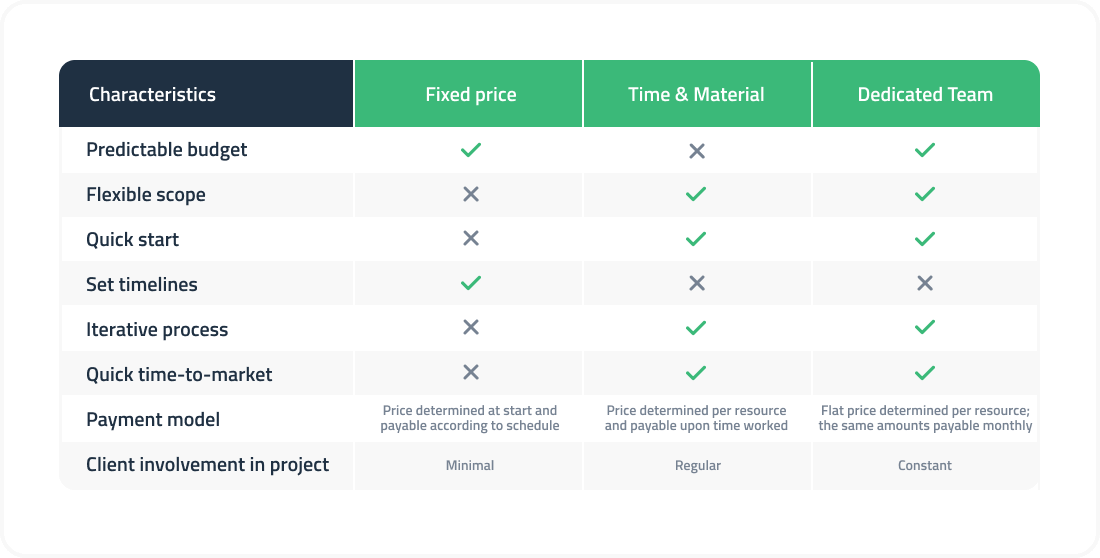

How is a dedicated resource model different from other engagement schemes?

This is not the only engagement model in the IT industry. There are others, but do they suit your particular case?

Time & Material

Clients usually prefer “Time & Material” when it is difficult to determine the product-market fit, the implementation time frame, and the project life cycle. To keep things simple, the project is divided into iterations of a set duration. At the end of each iteration, the client receives a working version of the product.

The catch is that the final budget and the timing of each project stage cannot be defined in advance, which is a significant drawback of this model. It also does not guarantee that you will always work with the same team.

Fixed Price

This model is effective when you know exactly how the final product should look and work. The budget is set before work starts and does not depend on the hours worked or the amount of work performed. Any costs above the estimate stated in the contract are covered by the service provider. This makes the model a common choice for small projects with clear requirements. However, it is poorly suited to fintech or blockchain projects, as well as to quick product releases.

The main risk is hidden costs. The time and scope of work cannot always be predicted accurately in advance, and you will have to pay the amount agreed in the fixed-price contract even if the work turns out to be incomplete.

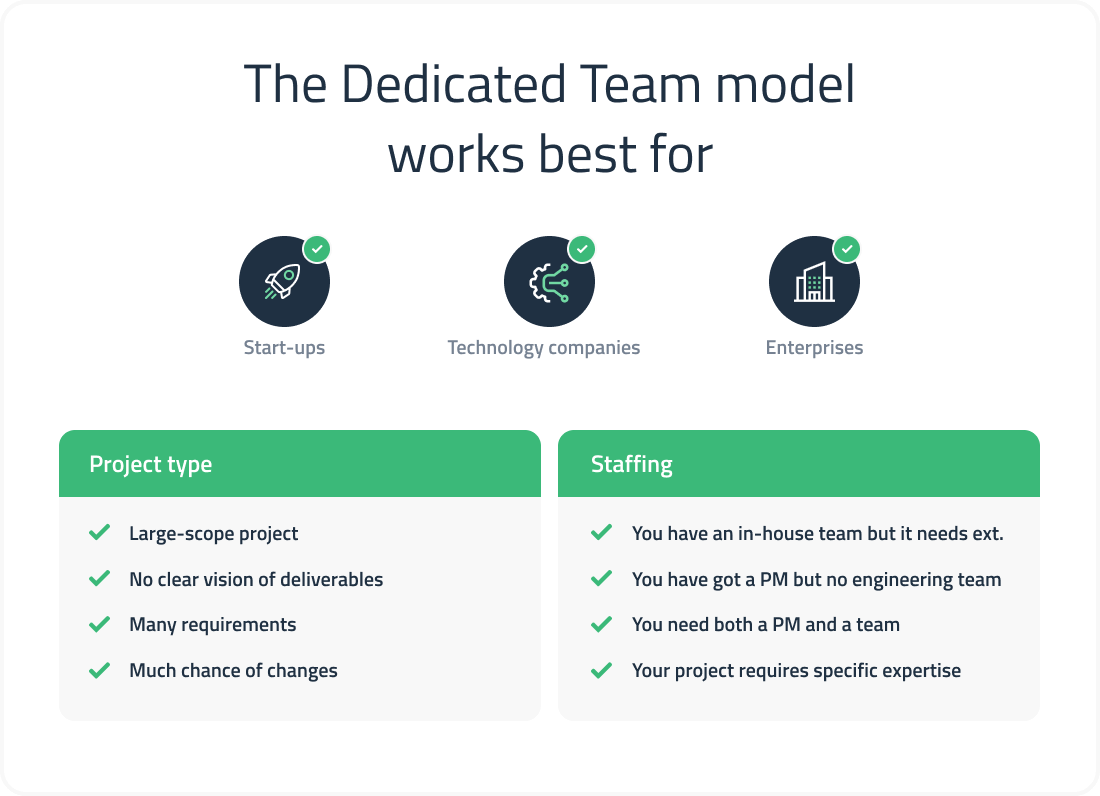

When is a dedicated team needed?

This form of collaboration gives you maximum freedom: a team devoted exclusively to your project and not distracted by other tasks. In IT, it works especially well in the following cases.

Fast-growing start-ups

If your project is meant to stay in continuous development and expand in the future, a dedicated team is often the most reasonable option. You need to show rapid growth, and a dedicated software development team lets you kick off the project right away without diverting effort from your core business tasks.

No outlined requirements

If your idea has not yet been validated against the market and still needs research, this model is what you need. Without it, you risk spending too much time and effort on tests and surveys. A dedicated model also provides enough resources to help you avoid cost overruns.

Long-term project

To unlock the product’s full potential, you need a strong dedicated development team all the way to project completion. You need to be sure that the specialists you work with will stay with you to the end, and this is exactly what this model is designed to provide.


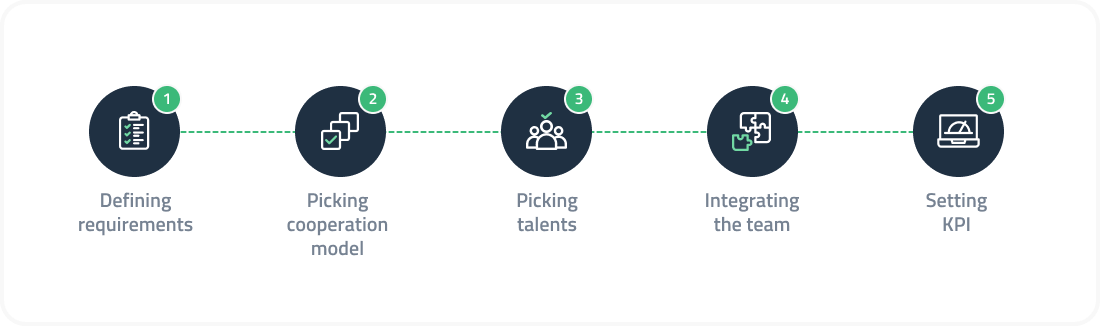

How to hire dedicated specialists

Here’s a brief guide to help you efficiently hire a dedicated development team.

Step 1. Defining requirements

Start with the following:

- Set goals and budget ahead of time

- Describe the project, job descriptions, preferred team size, and workflow

- Determine your requirements for each element of the process

Know from the very beginning what you want to achieve in the end, so that you avoid unnecessary difficulties with your dedicated team later.

Step 2. Picking a cooperation model

Decide if you need one holistic or multiple development teams for specific tasks. If your product is close to being ready, consider introducing an additional maintenance team.

Step 3. Selecting talents

If the vendor already has the necessary specialists on staff, you can start working immediately. If not, turn to recruitment professionals or the vendor’s partner talent pool. Assess candidates’ experience and their technical and social skills, and shortlist suitable ones for a final interview. Social networks and rating sites can help with the search.

Step 4. Integrating the team

Integrate the team smoothly into the project workflow. Choose your preferred approach and management tools. It is advisable to talk face to face with the new professionals and set up regular communication channels so you can monitor and control all processes.

Step 5. Setting KPIs

Choose key performance indicators (KPIs) that adequately reflect the performance of dedicated team members. You do not have to monitor the team around the clock: it is enough to set KPI targets at the start of the project and measure them at the end of each month. You can also change the team size at any time.
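To illustrate the “set targets at the start, measure monthly” routine, here is a minimal Python sketch. The KPI names, targets, and monthly values are hypothetical examples rather than recommended metrics; the only assumption built into the code is that each KPI should either reach its target or stay at or below it.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    """A KPI target fixed at the project start and checked every month."""
    name: str
    target: float
    higher_is_better: bool = True

    def is_met(self, actual: float) -> bool:
        # Some KPIs should go up (e.g., delivered work), others down (e.g., open bugs).
        if self.higher_is_better:
            return actual >= self.target
        return actual <= self.target


def monthly_review(kpis, actuals):
    """Map each KPI name to whether this month's measured value met its target."""
    return {kpi.name: kpi.is_met(actuals[kpi.name]) for kpi in kpis}


# Hypothetical KPIs and end-of-month measurements, for illustration only.
kpis = [
    Kpi("story_points_delivered", target=40),
    Kpi("critical_bugs_open", target=2, higher_is_better=False),
    Kpi("on_time_release_rate", target=0.9),
]
actuals = {
    "story_points_delivered": 44,
    "critical_bugs_open": 3,
    "on_time_release_rate": 0.95,
}

print(monthly_review(kpis, actuals))
# {'story_points_delivered': True, 'critical_bugs_open': False, 'on_time_release_rate': True}
```

Keeping the direction of each metric explicit avoids the common mistake of treating every KPI as “more is better”.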

Types of dedicated software development teams

A dedicated team can usually cover all the main aspects of a project:

- DevOps

- UX/UI design

- QA and testing

- R&D services

- IT consulting

- Software support and maintenance

Keep in mind that when you hire a dedicated development team, one or several of its professionals may specialize in several of these areas at once.

Best industries for using dedicated software development teams

Dedicated teams are hired to complete projects in any field:

- Transportation

- IT and telecommunications

- Robotics

- Medicine

- Banking and finance

- Cryptocurrency

- Trade, etc.

Such technological giants as Apple, IBM, American Express, Oracle, Amazon, and many others are known to have worked with dedicated teams.

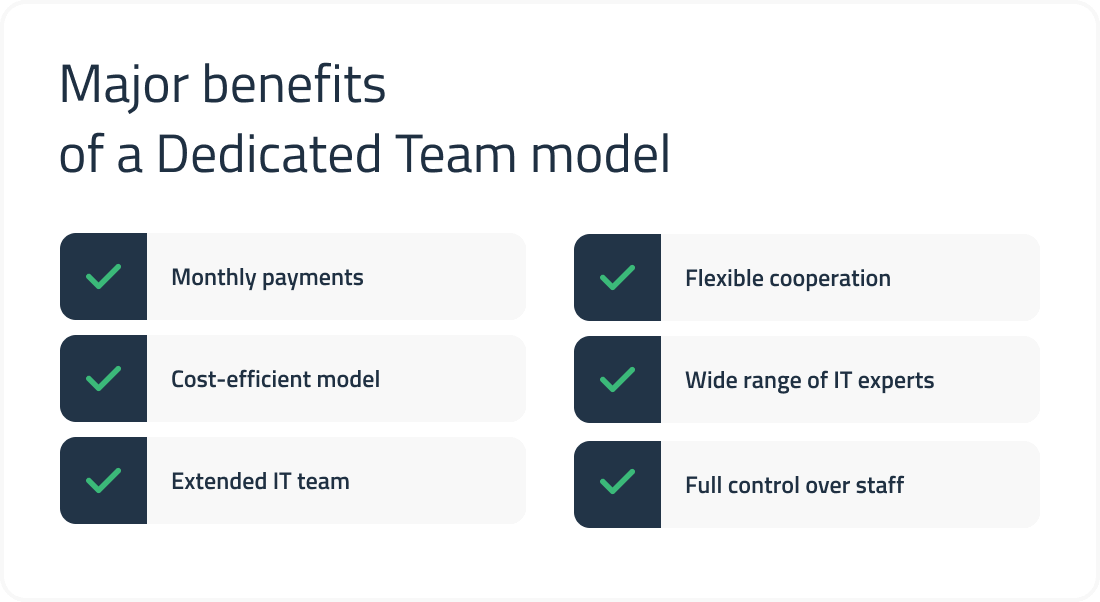

Benefits of a dedicated model

Stable budgeting

- Cost-efficient approach: Lower expenses than with full-time staff

- Ability to fit into a limited budget: You get the best employees for the budget you have

- Cost optimization: You pay for the scope of work and actual hours needed to complete a task

Flexible resources

- Global partnership: You get access to a worldwide talent pool

- Complete provision: You work with qualified personnel equipped with all the necessary software and hardware

- High scalability and flexibility: You can engage more people or reduce resources if required

- Remote work: You can save on workspace resources

All in all, you save the time and effort you would otherwise spend on finding competent employees and implementing the project.

Transparent communication

- Weekly/monthly reports: regular communication (you can set any communication frequency you see fit)

- A variety of communication channels: strong and flexible relationships

- Easy collaboration: dedicated apps such as Trello, Slack, Jira, and Basecamp

Social aspects solved

- Multicultural environment

- Cohesiveness: working as a team with people of different backgrounds, cultures, and work styles helps you acquire new skills

Reliable interaction and management

- Alignment with business strategy

- Rapid response to new challenges

- More control: you oversee the selection, management, and motivation of staff

- No need to describe specifications on your own

Simple scaling

- Structured workflow using agile practices

- Transparency and visibility of implementation

- Quick replacement of employees if necessary

- Full concentration on one project

- Fast development cycle

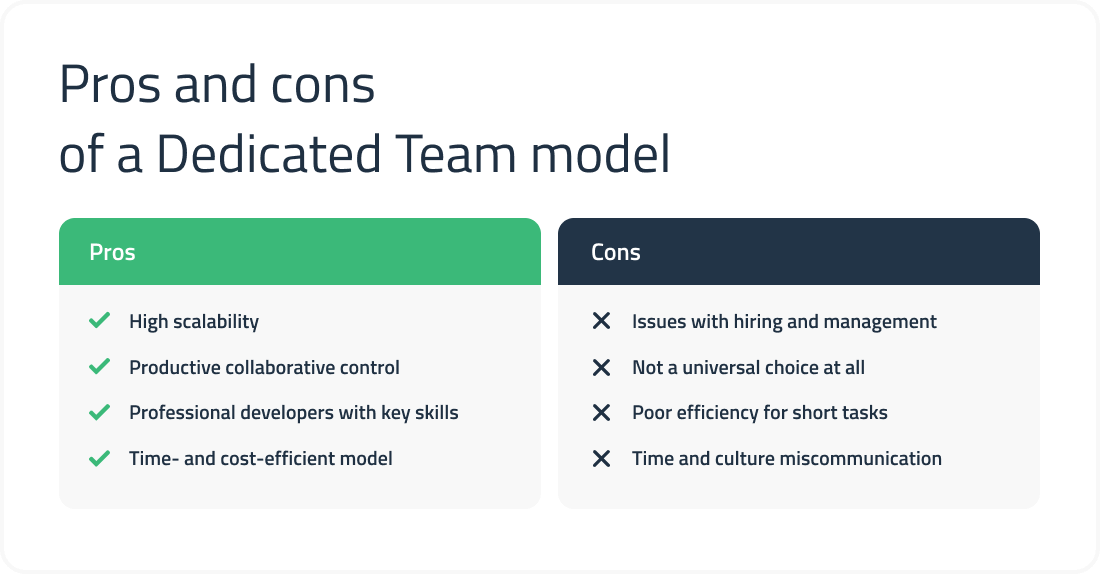

Pros and cons of a dedicated team

Advantages

High scalability. The dedicated team model fits long-term projects where the scope, tasks, and other parts of the project may change. A dedicated development team (DDT) can quickly adapt to changes, continue with the current project, or switch to other ones. If needed, you can hire more developers to fill the gaps.

Productive collaborative control. Management is often the trickiest part of remote work, but with this model you get full control over external collaborators, much as you have over your internal departments. Experienced offshore staff also often help with planning and supervision and bring in fresh ideas.

Professional developers with key skills. Access to the global talent pool is highly valuable for businesses, since you can find exactly the developers you need. Ruby on Rails? Kotlin? IoT? DevOps? Cloud? Blockchain? Outsourcing companies can almost always find the team of your dreams.

Time- and cost-efficient model. The model leads to substantial savings on salaries, office space, HR tasks, software and hardware, training, and so on. Thanks to different time zones, work can also continue day and night. And you can skip the long, tedious search for staff, since the outsourcing partner handles it.

Drawbacks

Issues with hiring and management. Some strengths can turn into weaknesses. For the best results, you will need an expert to work with the hiring companies. You will also need to spend more time overseeing offshore teams than in-house ones.

Not a universal choice. Weigh the expenses and potential ROI to decide whether this model is right for you. The following sections offer some suggestions, but assessing whether a DDT suits your case is ultimately your homework. Remember that this model is not a magic wand.
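One simple way to frame that decision is the standard ROI ratio, (expected gain - total cost) / total cost. The Python sketch below computes it; both figures are hypothetical placeholders that you would replace with your own cost estimate and projected returns.

```python
def roi(expected_gain: float, total_cost: float) -> float:
    """Return on investment as a fraction: (gain - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (expected_gain - total_cost) / total_cost


# Hypothetical 12-month figures in USD, for illustration only.
team_cost = 600_000      # e.g., hourly rates plus the vendor's administration fee
expected_gain = 750_000  # e.g., projected revenue or savings attributable to the product

print(f"ROI: {roi(expected_gain, team_cost):.0%}")  # prints "ROI: 25%"
```

A negative result means the projected gain does not cover the cost of the team over the period you chose.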

Poor efficiency for short tasks. In particular, this model rarely pays off for projects shorter than 12 months; in that case, you may consider hiring freelancers, for example. A dedicated software development team is much better suited to long-term cooperation with an open end date and changing requirements.

Time and cultural miscommunication. Last but not least, offshore staff live in different time zones and in countries with their own cultures. Take this into account to manage the team well: avoid words and images that may offend particular ethnic or religious groups, and organize development flexibly.

Dedicated software development vs in-house team

To help you pick the best option for your project, let’s compare how in-house and dedicated teams work in practice.

In-house employees

Pros:

- Deep immersion and attention to every project detail

- Opportunity to educate a specialist within the company

- Fast interaction

- Savings (for large projects)

Cons:

- Complex HR and accounting aspects

- Higher operating and labor costs

- Lack of expertise

- Possible compatibility issues

- Time-consuming organization of the workflow from scratch

Dedicated team

Pros:

- Lower operating costs: no payroll and tax costs

- No recruitment issues

- No training is required: specialists are ready to jump in, and collaborative processes are well polished

Cons:

- No possibility to interfere in the processes

- Issues related to time zone difference

As you can see, the comparison is clearly not in favor of in-house staff. According to Statista, 35% of companies today outsource IT services. At the same time, 18% of companies outsource to get professional expertise, and 24% to improve overall work efficiency.

Ultimate reasons to hire a dedicated team

Dedicated team collaboration works well in the following cases:

- The required expertise is lacking

- The project scope cannot be precisely defined, and the requirements may change along the way

- Quick MVP creation is required

- The finished product needs to be developed, improved, changed, or scaled

- A digital transformation or transition to a different technology stack is needed

Don’t make these mistakes

Expectations of dedicated teams are usually very high, so avoid the following mistakes:

Overestimated qualification

Each dedicated employee must have relevant experience and knowledge. Several years in IT and the ability to use up-to-date technologies are a standard requirement. You need real experts. Spreading responsibilities too thin or combining several roles in one person will only harm the project.

Poor workflow organization

There should be a stable working relationship between the members of the new team, supported by:

- A single vision of tasks and goals

- Shared common values

- A common understanding of roles

Poor interaction

Talk to the newly hired team as often as possible. Explain the product’s purpose and social importance, and reach a common understanding. Hold meetings regularly and discuss work problems openly.

Poor finance management

Don’t be tempted by the cheapest services: a low price often means a low-quality product. Choose professionals whose rate expectations match your quality standards, and try to offer favorable social and working conditions.

Isolating the team

Give new employees the opportunity to communicate freely with your specialists. Teamwork lets them show their best qualities and compensates for the skill gaps that everyone has.

Three life hacks to make the dedicated team model work

In order to successfully introduce a dedicated development model, make sure to work on the following aspects:

Preliminary planning

Delegating work succeeds when the project scope is assessed correctly and the list of required specialists is compiled carefully. Before looking for a service provider, identify the roles that require outside expertise. Your job openings should include a detailed description of the duties and the number of hours for each role.

Smooth introduction

Assign tasks to new employees. Feel free to interview people to test their technical and social skills. Be sure to draw up a contract in advance that outlines your hiring expectations and desired outcomes, and specify the required project scope and scaling conditions.

The agreement should also state pay rates and the estimated software development costs. Before signing the contract, make sure that every employee, in-house or remote, is fully involved in the process.

Focus on results

Implement a product-centric organizational structure. To do this, form a strategic vision backed by resources. Introduce measures to motivate employees and to monitor progress toward business goals.

You must maintain cross-functional communication in an environment where employees report directly to the project manager. Your structure will achieve high performance if all goals are aligned with the business strategy.

Cost of hiring a dedicated development team

According to the latest research, 78% of respondents use a hybrid employee model. At the same time, the key reason for choosing outsourcing and delegation of work is to reduce costs.

Many people believe it is much easier and cheaper to hire one efficient team for the long term than to bring in individual specialists on demand. Indeed, in the dedicated team model, the hourly rate is the main expense: you do not pay for office rent, equipment, taxes, electricity bills, and so on.

To avoid overpaying, you can use our table of typical hourly rates for IT specialists, in US dollars, across major regions.

| Region (hourly rate, USD) | Project Manager | Designer | Frontend Developer | Backend Developer |
|---|---|---|---|---|
| Eastern Europe | 20-40 | 35-60 | 30-80 | 40-90 |
| Western Europe | 30-70 | 45-100 | 55-100 | 65-105 |
| South America | <40 | <45 | 25-55 | 35-65 |
| Asia | <35 | <40 | <45 | <55 |
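To turn the rates in the table into a rough monthly budget, multiply each rate by headcount and working hours. The Python sketch below does this for Eastern Europe, the only region where the table gives both a lower and an upper bound for every role. The team composition and the figure of 160 working hours per month are assumptions for illustration, and the estimate leaves out the vendor’s administration fees.

```python
# Hourly rate ranges (USD) for Eastern Europe, taken from the table above.
EASTERN_EUROPE_RATES = {
    "project_manager": (20, 40),
    "designer": (35, 60),
    "frontend_developer": (30, 80),
    "backend_developer": (40, 90),
}

# Hypothetical team composition: role -> number of people.
TEAM = {
    "project_manager": 1,
    "designer": 1,
    "frontend_developer": 2,
    "backend_developer": 2,
}

HOURS_PER_MONTH = 160  # assumption: roughly 40 hours per week, full-time


def monthly_cost_range(rates, team, hours=HOURS_PER_MONTH):
    """Return the (low, high) estimated monthly cost in USD for the given team."""
    low = sum(rates[role][0] * count * hours for role, count in team.items())
    high = sum(rates[role][1] * count * hours for role, count in team.items())
    return low, high


low, high = monthly_cost_range(EASTERN_EUROPE_RATES, TEAM)
print(f"Estimated monthly cost: ${low:,} to ${high:,}")
# Estimated monthly cost: $31,200 to $70,400
```

The wide spread between the bounds reflects the wide rate ranges in the table; narrowing it requires actual quotes from vendors.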

How to start working with a dedicated team

- Describe the project scope, goals, and vision to the software development vendor so that their business analysts can determine exactly how many people are needed

- Discuss the findings of the discovery stage (business analysis) with the vendor. You should be aware of the scope, specification, project timeframe, roadmap, expected budget, team composition, tech stack, etc.

- Meet with the team and clarify their roles and responsibilities, how access to development tools will be organized, and agree on project priorities

- Find the perfect match between specifications, technology stack, and motivation level. Your new specialists should not only understand the task but also be passionate about their work (or at least well motivated)

Best countries for hiring

You can find a suitable offshore dedicated development team almost anywhere in the world. What matters is the balance between competencies and cost. In this respect, the most cost-effective specialists come from Eastern Europe, primarily Ukraine and Poland, as well as from India and Argentina.

The Ukrainian IT market, with more than 200,000 specialists available at the moment, is one of the largest in the world; for example, it has the largest number of C++ developers in Europe. According to HackerRank, Ukrainian developers have an average score of 88.7%. In addition, hourly rates are lower than in the US and Western Europe, which makes for a strong match of price and quality. Other benefits of outsourcing to Ukraine:

- European time zone

- Similar work culture

You can quickly find the staff you need. The average hire time is one month.

The composition of a dedicated team: A closer look

A team usually includes software engineers and a project manager. A project manager is needed if you lack:

- Project management experience

- Specific development requirements

- A development lifecycle management expert

Below is a list of professionals relevant for a project of any complexity:

- Front-end developers. They are responsible for the appearance and behavior of the product on the client side: selecting, implementing, and testing interface components.

- UI/UX designers. They are responsible for the look and usability of the interface, with an emphasis on shapes, colors, textures, and other visual and UX aspects of the product.

- Backend engineers. They create web services, APIs, data stores, and other logical entities on the server side.

- DevOps engineers. They coordinate project operations, such as infrastructure, deployment, and testing.

- QA engineers. They control the product’s quality before launch and identify errors and shortcomings.

- Business analysts. BAs define requirements, evaluate processes, and provide data-driven reports.

With these experts at your disposal, you can take on a project of almost any complexity.

Summary

A dedicated team is the best option for technology start-ups and fast-growing companies, as well as projects with many vague requirements. For enterprises seeking long-term cooperation, this is the best choice in terms of cost and professional level of staff.

Whether you need a dedicated team depends on your specific situation. If you are not sure, feel free to contact us. We will help you quickly select specialists who fully meet your goals at a reasonable price.

FAQ

What does a development team do?

A development team helps find the product-market fit, creates UX and UI design, writes code, and provides quality control and product maintenance. Among the key functions are project and software planning, testing, analysis, and programming throughout the project.

How to choose a dedicated development team?

Learn as much as possible about the software vendor. Critical things to check are the company’s ranking, its website, and its portfolio and customer reviews.

How to hire a dedicated software development team?

First, find an outsourcing provider that offers dedicated team services. Contact them and describe your project requirements. Agree on the terms of cooperation, interview potential team members, and draw up a contract.